What is an autonomous system?

Autonomous driving is becoming more and more popular as the technical possibilities grow every year. More and more companies are presenting their progress, and in some countries certain cars are even allowed to drive without anyone at the wheel. We want to prepare our members for this field of research, and at the same time we build a fast race car.

About our Autonomous System

Our driverless team is the newest subgroup of KaRaT. It was founded so that we can take part in Formula Student Driverless. Since the Electronyte e21 is our first driverless car, we are still at the beginning of our journey and therefore have plenty of room for experimentation. We are glad to have found many generous sponsors who provide us with the latest technology; without their support, we would not be able to build a driverless race car. Below, we give an overview of some of the things we are working on.

What we do

Design and programming

The first step is always to analyze the problem and figure out how to solve it: for example, how do we brake, and how do we steer? With a driver on board, you never have to ask these questions, because the driver performs these actions. Once we have come up with a solution, it is time to code it.

Simulation

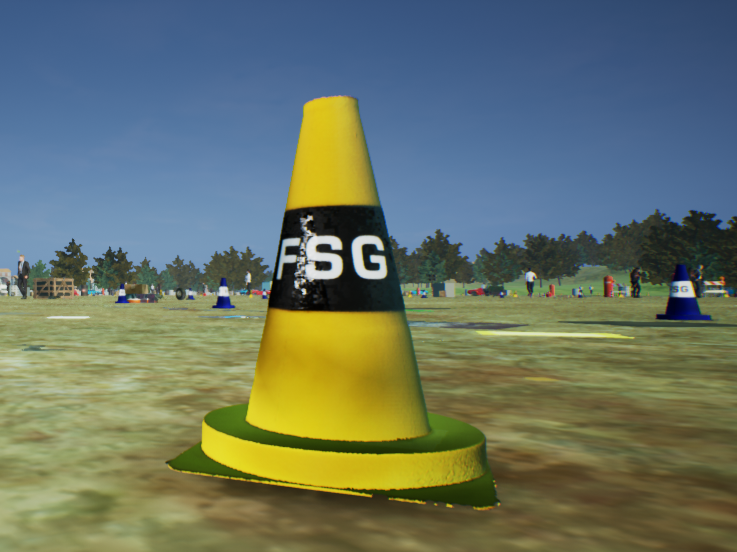

Our simulation in the Unreal Engine is a big part of our workflow. Testing on the real vehicle takes a lot of time because it requires extensive preparation. For this reason, we built a highly realistic simulation in which every component can be tested before it goes onto the vehicle.

Assembly and testing

Once everything runs in the simulation, it is time to bring it all together. We first test our system on our modular test car, and if the tests succeed, the components are moved onto the actual car.

Technical highlights

Here you can find the things the subteam is especially proud of. Many of them are only possible thanks to our sponsors, who are credited next to the respective parts.

Camera object detection

The eyes of the car

We use up to three mvBlueFOX3-2024C-1112 cameras from Matrix Vision simultaneously for detecting and distinguishing blue and yellow cones. The image quality they provide allows us to use a convolutional neural network based on CenterNet to spot the cones. This neural network has been trained using images from our Unreal Engine simulation.
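CenterNet represents each object by the peak of a per-class heatmap, and detections are read out by keeping pixels that are at least as large as their eight neighbours. The sketch below illustrates only that decoding step with NumPy; it is not our network code. The two-channel layout (blue, yellow), the threshold, and the function names are hypothetical, and the size and offset heads that CenterNet also predicts are left out.

```python
import numpy as np

# Hypothetical channel order of the heatmap produced by the network.
CLASS_NAMES = ("blue_cone", "yellow_cone")


def max_pool_3x3(heatmap: np.ndarray) -> np.ndarray:
    """3x3 max filter (stride 1, same size) over a (C, H, W) float array."""
    padded = np.pad(heatmap, ((0, 0), (1, 1), (1, 1)), constant_values=-np.inf)
    _, h, w = heatmap.shape
    # Stack the nine shifted views and take the element-wise maximum.
    windows = [padded[:, dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)]
    return np.max(np.stack(windows), axis=0)


def decode_peaks(heatmap, threshold: float = 0.3, top_k: int = 100):
    """Return (class_name, x, y, score) for heatmap peaks, highest score first."""
    heatmap = np.asarray(heatmap, dtype=float)
    pooled = max_pool_3x3(heatmap)
    # A pixel is a peak if it equals the maximum of its 3x3 neighbourhood.
    is_peak = (heatmap == pooled) & (heatmap >= threshold)
    cls, ys, xs = np.nonzero(is_peak)
    scores = heatmap[cls, ys, xs]
    order = np.argsort(-scores)[:top_k]
    return [(CLASS_NAMES[cls[i]], int(xs[i]), int(ys[i]), float(scores[i])) for i in order]
```

The max-pooling comparison acts as a cheap non-maximum suppression, so a weaker response right next to a cone's peak is discarded instead of being reported as a second cone.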

GPS & IMU

Our GPS and IMU provide us with information about the movement of the car

Even though the GPS and the IMU (Inertial Measurement Unit) do not provide visual information like the cameras and the LiDAR do, they are just as important: they supply our Extended Kalman Filter with the pose and motion data we rely on heavily.
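To show how an Extended Kalman Filter combines these two sensors, here is a minimal sketch, not our actual filter. It assumes a planar state [x, y, yaw, v], uses the IMU's longitudinal acceleration and yaw rate to drive the prediction step, and treats a GPS position already converted to local metric coordinates as the measurement. The class name and all noise values are hypothetical placeholders.

```python
import numpy as np


class SimpleEKF:
    """Planar EKF with state [x, y, yaw, v] (hypothetical model)."""

    def __init__(self, q=None, r=None):
        self.s = np.zeros(4)  # state estimate
        self.P = np.eye(4)    # state covariance
        # Process and GPS noise: placeholder values, not tuned for any car.
        self.Q = np.diag([0.01, 0.01, 0.001, 0.1]) if q is None else q
        self.R = np.diag([0.5, 0.5]) if r is None else r
        # GPS observes the position (x, y) directly.
        self.H = np.array([[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]])

    def predict(self, accel, yaw_rate, dt):
        x, y, yaw, v = self.s
        # Jacobian of the motion model, evaluated at the previous state.
        F = np.array([
            [1.0, 0.0, -v * np.sin(yaw) * dt, np.cos(yaw) * dt],
            [0.0, 1.0, v * np.cos(yaw) * dt, np.sin(yaw) * dt],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0],
        ])
        # Nonlinear motion model driven by the IMU readings.
        self.s = np.array([
            x + v * np.cos(yaw) * dt,
            y + v * np.sin(yaw) * dt,
            yaw + yaw_rate * dt,
            v + accel * dt,
        ])
        self.P = F @ self.P @ F.T + self.Q

    def update_gps(self, z):
        # Standard Kalman update with the (linear) GPS position measurement.
        innovation = np.asarray(z, dtype=float) - self.H @ self.s
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.s = self.s + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P
```

The IMU runs at a high rate and keeps the prediction smooth, while each slower GPS fix pulls the position back and shrinks the covariance; only the prediction is nonlinear here, which is why the Jacobian `F` is needed.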

LiDAR sensor

Light detection and ranging sensor

The cameras may be superior for object detection and classification, but our Velodyne VLP-16 LiDAR has an advantage of its own: the point cloud it generates gives very precise three-dimensional coordinates for every point. To combine the strengths of both devices, we fuse their data, using the cameras for object detection and the LiDAR for distance computation.

- Distance Estimation

- Object Detection

- 3D Point Cloud
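One common way to fuse the two sensors is to project the LiDAR points into the camera image and keep the points that land inside a detected cone's bounding box. The sketch below assumes a calibrated rotation `R` and translation `t` from the LiDAR frame into the camera frame, a pinhole intrinsic matrix `K`, and boxes given as pixel corners; the function names and the choice of the median distance are illustrative assumptions, not our exact pipeline.

```python
import numpy as np


def project_points(points_lidar, R, t, K):
    """Project (N, 3) LiDAR points to pixels.

    R (3x3) and t (3,) map LiDAR coordinates into the camera frame
    (x right, y down, z forward); K is the 3x3 intrinsic matrix.
    Returns pixel coords for points in front of the camera and the mask of those points.
    """
    pts_cam = points_lidar @ R.T + t
    valid = pts_cam[:, 2] > 0.0          # drop points behind the camera
    uvw = pts_cam[valid] @ K.T           # homogeneous pixel coordinates
    uv = uvw[:, :2] / uvw[:, 2:3]
    return uv, valid


def cone_distance(points_lidar, box, R, t, K, min_points=3):
    """Median LiDAR range of the points falling inside box = (u_min, v_min, u_max, v_max).

    Returns None if fewer than min_points hit the box.
    """
    uv, valid = project_points(points_lidar, R, t, K)
    u_min, v_min, u_max, v_max = box
    u, v = uv[:, 0], uv[:, 1]
    in_box = (u >= u_min) & (u <= u_max) & (v >= v_min) & (v <= v_max)
    hits = points_lidar[valid][in_box]
    if len(hits) < min_points:
        return None
    # Distance measured from the LiDAR origin; the median resists stray ground points.
    return float(np.median(np.linalg.norm(hits, axis=1)))
```

The camera decides *what* is in the box and the LiDAR decides *how far away* it is, which mirrors the division of labour described above.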

Simulation

Simulation is a key part of a fast and reliable testing process

The simulation, which we built using the Unreal Engine, serves several purposes. Since we use a neural network to detect cones, we need data to train it. We do not have the capacity to collect this data with our test vehicle alone, so we use the simulation to record synthetic images. Furthermore, we use it to test the path planning, which is far more convenient than testing it in reality. To some extent, we can also simulate the sensors we use.

EBS

Our emergency brake system, which takes over the driver's role of braking

Since the car has no driver, there has to be a system that shuts down the car no matter what happens. To achieve this, we use a passive system that brakes hard if an error occurs or if we trigger it. This system has to be 100% safe and is checked automatically every time the car starts.
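The EBS itself is mechanical and pneumatic hardware, but the fail-safe idea behind a passive system can be illustrated in software: the brake engages by default and is only held open while everything is verified to be healthy. The sketch below is a hypothetical monitor with a start-up check, a heartbeat timeout, and a latched trigger; the class, method names, and thresholds are assumptions, and it does not reproduce the official Formula Student check sequence.

```python
from dataclasses import dataclass
from enum import Enum, auto


class EbsState(Enum):
    UNCHECKED = auto()   # start-up check not yet passed: brake stays engaged
    ARMED = auto()       # check passed: brake may be held open
    TRIGGERED = auto()   # emergency brake engaged; latched until restart


@dataclass
class EbsMonitor:
    """Hypothetical software side of a passive (fail-safe) emergency brake."""

    heartbeat_timeout_s: float = 0.1   # placeholder value
    min_pressure_bar: float = 80.0     # placeholder value
    state: EbsState = EbsState.UNCHECKED
    last_heartbeat_s: float = float("-inf")

    def startup_check(self, stored_pressure_bar: float, brakes_engaged_on_release_cut: bool) -> bool:
        """Run at every start: arm only if pressure is sufficient and cutting the
        release signal was observed to engage the brakes."""
        ok = stored_pressure_bar >= self.min_pressure_bar and brakes_engaged_on_release_cut
        self.state = EbsState.ARMED if ok else EbsState.TRIGGERED
        return ok

    def heartbeat(self, now_s: float) -> None:
        """Called periodically by the autonomous system while it is healthy."""
        self.last_heartbeat_s = now_s

    def report_error(self) -> None:
        """Any detected fault, or a manual stop request, triggers the brake."""
        self.state = EbsState.TRIGGERED

    def hold_brake_open(self, now_s: float) -> bool:
        """Return True only if the brake may stay released at time now_s."""
        if self.state is not EbsState.ARMED:
            return False
        if now_s - self.last_heartbeat_s > self.heartbeat_timeout_s:
            self.state = EbsState.TRIGGERED  # a missing heartbeat counts as an error
            return False
        return True
```

Every path that is not explicitly healthy returns `False`, so a crash, a frozen process, or a failed start-up check all end in braking, which is the software analogue of a passive brake.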